I'm a computer scientist / engineer interested in robotics, machine learning, and scientific sensors (especially when applied to earth science). Much of my research is about learning models of the physical world for use with interactive agents. I am currently a Senior Staff Research Scientist at Boston Dynamics, researching learned control for humanoid robots. Previously, I was a PhD student at McGill University in the Mobile Robotics Lab and at Mila, working with Greg Dudek. During grad school, I worked part-time as a research intern at the Samsung AI Center (SAIC) in Montreal, where I focused on dexterous manipulation with visuotactile sensors. In the summer of 2019, I interned at JPL, where I worked on machine vision aspects of the Mars Sample Return Mission. Before starting graduate school, I was an at-sea engineer for the autonomous underwater vehicle Sentry in the National Deep Submergence Facility at WHOI, and an engineer working on applied sensing at SwRI.

Email / CV / Bio / Google Scholar / Twitter / GitHub


Johanna Hansen*, Kyle Kastner*, Yuying Huang, Aaron Courville, Dave Meger, and Gregory Dudek

This paper introduces a pixel-based actor-critic architecture featuring a differentiable Denavit-Hartenberg (DH) forward kinematics function in the critic sub-network. Compared to strong baselines, it substantially improves average cumulative reward across several complex manipulation tasks and two robot arms in Robosuite. For rigid-body robots, forward kinematics as described by the DH parameterization is fully differentiable with respect to the input joint angles, given fixed link-relative geometric information, and including this differentiable module improves the training of reinforcement learning agents on an array of benchmark manipulation tasks. An ablation study shows the importance of a differentiable kinematic function for overall task performance, and we demonstrate a simulation-learned policy running on a real 7-DOF Jaco robot.
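
The following minimal sketch in plain Python shows why DH forward kinematics is differentiable in the joint angles. It chains standard (distal) DH transforms, and each transform is built only from sines, cosines, and products of the joint angle θ with the fixed link parameters (d, a, α). The end-effector pose is therefore a smooth function of θ. The two-link planar arm and its unit link lengths are hypothetical and are not the Jaco's parameters. The central-difference Jacobian stands in for the exact gradient that an autodiff framework would compute inside the critic.

```python
import math


# Assumption: standard (distal) DH convention, T_i = Rz(theta) Tz(d) Tx(a) Rx(alpha).
def dh_transform(theta, d, a, alpha):
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def forward_kinematics(joint_angles, dh_params):
    """Compose per-link transforms into the 4x4 base-to-end-effector transform."""
    T = [[float(i == j) for j in range(4)] for i in range(4)]
    for theta, (d, a, alpha) in zip(joint_angles, dh_params):
        T = matmul4(T, dh_transform(theta, d, a, alpha))
    return T


def end_effector_position(joint_angles, dh_params):
    T = forward_kinematics(joint_angles, dh_params)
    return [T[0][3], T[1][3], T[2][3]]


def position_jacobian(joint_angles, dh_params, eps=1e-6):
    """Central-difference d(position)/d(theta) as a 3 x n_joints matrix."""
    columns = []
    for j in range(len(joint_angles)):
        plus, minus = list(joint_angles), list(joint_angles)
        plus[j] += eps
        minus[j] -= eps
        p_plus = end_effector_position(plus, dh_params)
        p_minus = end_effector_position(minus, dh_params)
        columns.append([(hi - lo) / (2 * eps) for hi, lo in zip(p_plus, p_minus)])
    return [[columns[j][r] for j in range(len(columns))] for r in range(3)]


# Hypothetical two-link planar arm: (d, a, alpha) for each link, unit link lengths.
PLANAR_ARM = [(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
```

For example, `end_effector_position([math.pi / 2, 0.0], PLANAR_ARM)` places the tip at roughly (0, 2, 0). The x-row of the Jacobian there is close to (-2, -1), which matches the analytic derivative of x = cos θ1 + cos(θ1 + θ2).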

Johanna Hansen, Travis Manderson, Greg Dudek

Despite precise weather forecasting, satellite observations, and marine instrumentation across much of the ocean, we know little about how currents move on the ocean's surface. In this ongoing research effort, developed for the DARPA Forecasting Floats in Turbulence (FFT) Challenge, we predict float trajectories 10 days into the future using a combination of physics models of the ocean, weather predictions, and transfer learning from past float trajectories (5th place in the global competition).

Johanna Hansen*, Melissa Mozifian*, and Sahand Rezaei-Shoshtari

The goal of this project is to build a sim2real and transfer learning interface for the Kinova Jaco robot. Our transfer frameworks work with both DM Control Suite and Robosuite. Ongoing projects that use these tools include Intervention Design for Effective Sim2Real Transfer (IBIT) and Transfer Learning with Differentiable Physics-Informed Priors.

Docker / ROS Interface / RL Example / IBIT / Robosuite

Johanna Hansen and Kyle Kastner

In this project, we investigate planning on imagined futures that consist of learned discrete states. With a pre-trained world model, we achieve scores of +30 on Freeway, a hard-exploration Atari task. The thumbnail shows our agent with a Grad-CAM overlay; note how nearby cars influence both the agent's actions and the gradient. This is ongoing work that builds on the research threads on exploration and associative generative modeling described below.

Johanna Hansen and Kyle Kastner

To learn more about exploration in reinforcement learning, we set out to implement two papers on exploration by Ian Osband et al.: Deep Exploration via Bootstrapped DQN and Randomized Prior Functions for Deep Reinforcement Learning. We evaluate our implementation on Atari and investigate model-based and double-Q learning variants of the work, matching state-of-the-art results. Ongoing work.
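
The core mechanics of the two papers can be sketched in a tabular setting. This is a minimal, hypothetical Python sketch and not our Atari implementation. Each of K heads models Q_k(s, a) = f_k(s, a) + β·p_k(s, a), where p_k is a fixed random prior that is never trained. Each transition carries a Bernoulli bootstrap mask that decides which heads learn from it. The agent commits to one randomly sampled head per episode, which gives temporally consistent exploration. The class name, table sizes, and hyperparameter defaults are assumptions. The deep versions replace the tables with networks and add a replay buffer and target network.

```python
import random


class BootstrappedQWithPriors:
    """Tabular sketch: K bootstrapped Q heads, each with a fixed random prior."""

    def __init__(self, n_states, n_actions, n_heads=5, prior_scale=1.0,
                 mask_prob=0.5, lr=0.1, gamma=0.99, seed=0):
        self.rng = random.Random(seed)
        self.n_actions = n_actions
        self.n_heads = n_heads
        self.prior_scale = prior_scale  # beta in Q = f + beta * p
        self.mask_prob = mask_prob      # probability that a head trains on a transition
        self.lr = lr
        self.gamma = gamma
        # Trainable tables f_k(s, a), initialized to zero.
        self.f = [[[0.0] * n_actions for _ in range(n_states)] for _ in range(n_heads)]
        # Fixed random priors p_k(s, a); these are never updated.
        self.prior = [[[self.rng.gauss(0.0, 1.0) for _ in range(n_actions)]
                       for _ in range(n_states)] for _ in range(n_heads)]

    def q_values(self, k, s):
        """Q_k(s, .) = f_k(s, .) + beta * p_k(s, .)."""
        return [self.f[k][s][a] + self.prior_scale * self.prior[k][s][a]
                for a in range(self.n_actions)]

    def sample_head(self):
        """Pick one head to follow greedily for a whole episode."""
        return self.rng.randrange(self.n_heads)

    def act(self, k, s):
        qs = self.q_values(k, s)
        return max(range(self.n_actions), key=qs.__getitem__)

    def bootstrap_mask(self):
        """Decide which heads will train on a newly stored transition."""
        return [self.rng.random() < self.mask_prob for _ in range(self.n_heads)]

    def update(self, s, a, r, s_next, done, mask):
        """One Q-learning step per masked-in head; only f_k changes, never the prior."""
        for k in range(self.n_heads):
            if not mask[k]:
                continue
            target = r if done else r + self.gamma * max(self.q_values(k, s_next))
            td_error = target - self.q_values(k, s)[a]
            self.f[k][s][a] += self.lr * td_error
```

In typical use, you call `sample_head()` at the start of each episode, act with `act(k, s)`, and draw `bootstrap_mask()` once per transition when it is stored. Because the heads start from different random priors and see different data subsets, they disagree most in rarely visited states, and that disagreement drives exploration.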

Johanna Hansen* and Kyle Kastner*

In this generative modeling project, we implement Associative Compression Networks for Representation Learning (ACN) by Graves, Menick, and van den Oord. We also introduce a VQ-VAE-style decoder to the ACN model for discrete latents and call this architecture ACN-VQ. The thumbnail shows an example from the validation set (leftmost image) and its nearest neighbors (right columns) according to the learned ACN model. Ongoing work applies this to exploration in reinforcement learning.
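
Here is a rough sketch of the nearest-neighbor lookup. It assumes an encoder has already mapped each image to a fixed-length code vector and that distances are Euclidean. The encoder is omitted, and the toy codes below are hypothetical. During ACN training, the prior for each code is conditioned on one neighbor drawn at random from that code's K nearest neighbors in the dataset. At evaluation time, the same lookup retrieves neighbors like those in the thumbnail. The brute-force search here only suits small datasets.

```python
import math
import random


def k_nearest(query, codes, k, exclude=None):
    """Indices of the k codes closest to `query` in Euclidean distance, skipping `exclude`."""
    candidates = [i for i in range(len(codes)) if i != exclude]
    candidates.sort(key=lambda i: math.dist(query, codes[i]))
    return candidates[:k]


def sample_prior_neighbor(codes, i, k, rng):
    """ACN-style conditioning: draw one of the k nearest neighbors of code i (excluding itself)."""
    return rng.choice(k_nearest(codes[i], codes, k, exclude=i))


# Hypothetical 2-D codes standing in for encoder outputs.
codes = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [5.0, 5.0]]
```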

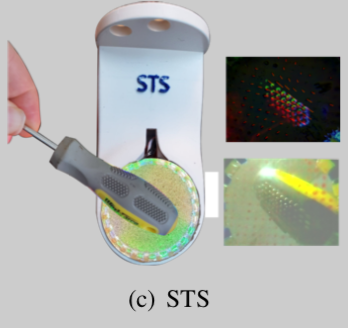

Johanna Hansen, Francois Hogan, Dmitriy Rivkin, David Meger, Michael Jenkin, and Gregory Dudek

Presented at ICRA 2022, Philadelphia, PA

Manipulating objects with dexterity requires timely feedback that simultaneously leverages the senses of vision and touch. In this paper, we focus on the problem setting where both visual and tactile sensors provide pixel-level feedback to visuotactile reinforcement learning agents. We investigate the challenges associated with multimodal learning and propose several improvements to existing RL methods, including tactile gating, tactile data augmentation, and visual degradation. Compared with visual-only and tactile-only baselines, our Visuotactile-RL agents show (1) significant improvements in contact-rich tasks; (2) improved robustness to visual changes (lighting/camera view) in the workspace; and (3) resilience to physical changes in the task environment (weight/friction of objects).

Yuying (Blair) Huang, Yiming Yao, Johanna Hansen, Jeremy Mallette, Sandeep Manjanna, Greg Dudek, and David Meger

Selected for Poster Competition (Top ~10% Student Paper) at OCEANS 2021, San Diego

This paper presents the portable autonomous probing system (APS), a low-cost robotic design for collecting water quality measurements at targeted depths from an autonomous surface vehicle (ASV). This system fills an important but often overlooked niche in marine sampling by enabling mobile sensor observations throughout the near-surface water column without the need for advanced underwater equipment.

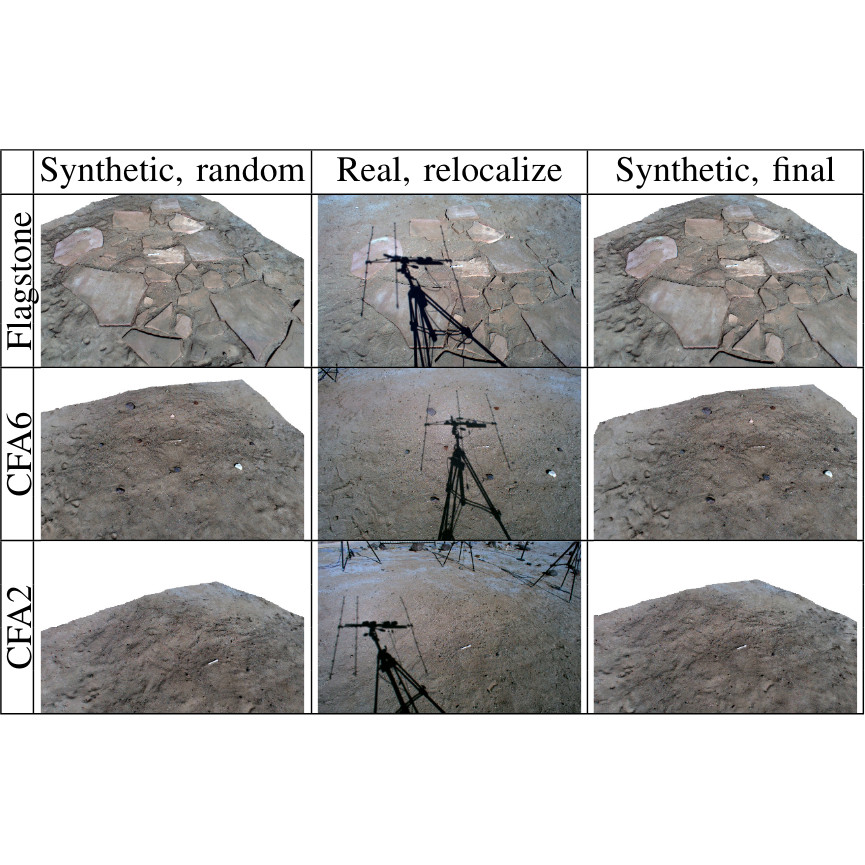

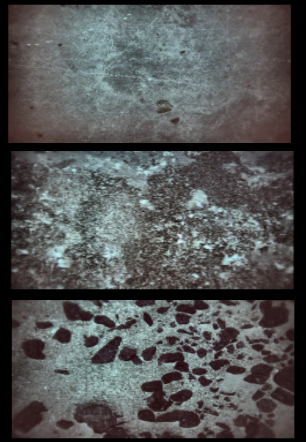

Tu-Hoa Pham, William Seto, Shreyansh Daftry, Barry Ridge, Johanna Hansen, Tristan Thrush, Mark Van der Merwe, Gerard Maggiolino, Alexander Brinkman, John Mayo, Yang Cheng, Curtis Padgett, Eric Kulczycki, Renaud Detry

Accepted to IEEE Robotics and Automation Letters, 2021

We consider the problem of rover relocalization in the context of the notional Mars Sample Return campaign. In this campaign, a rover (R1) needs to be capable of autonomously navigating and localizing itself within an area of approximately 50 × 50 m using reference images collected years earlier by another rover (R0). We propose a visual localizer that is robust to the relatively barren terrain we expect to find in relevant areas, and to large lighting and viewpoint differences between R0 and R1.

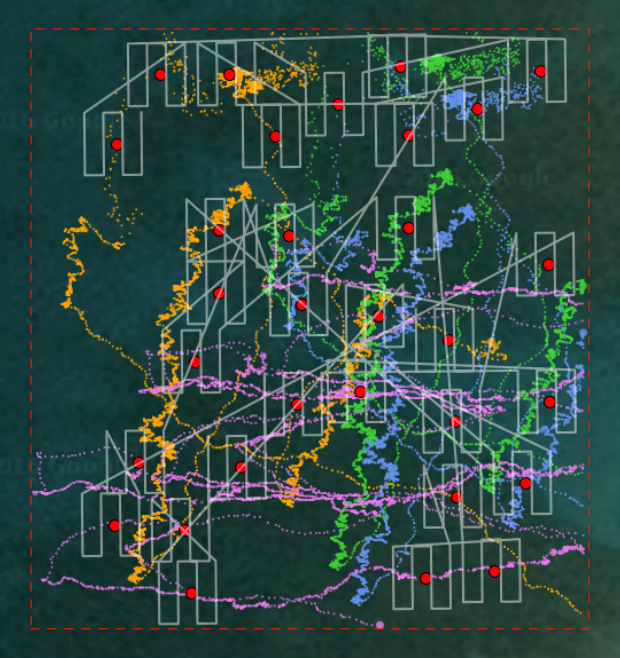

Johanna Hansen, Sandeep Manjanna, Alberto Quattrini Li, Ioannis Rekleitis, Gregory Dudek

Selected for Poster Competition (Top ~10% Student Paper) at OCEANS 2018, Charleston

We present a transportable system for ocean observations in which a small autonomous surface vehicle (ASV) adaptively collects spatially diverse samples with the aid of a team of inexpensive, passive floating sensors known as drifters. Drifters can increase spatial coverage at little cost because they are propelled around the survey area by the ambient flow field rather than by actuators. Our iterative planning approach demonstrates how the ASV can strategically deploy drifters at points in the flow field with high expected information gain, while also adaptively sampling the space.
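
A common way to score "expected information gain" is with a Gaussian process (GP) model of the sampled field. The paper's actual model is not described here, so treat the following as an assumption. In this minimal Python sketch, the information gained by a noisy observation at x is 0.5·log(1 + σ²(x)/σ_n²). Here σ²(x) is the GP posterior variance given the locations already sampled, and σ_n² is the measurement noise variance. Deployment points are then chosen greedily. The RBF kernel, its unit length scale, the noise level, and the candidate locations are hypothetical. The sketch also ignores how drifters are advected after release.

```python
import math


def rbf(x, y, length_scale=1.0, signal_var=1.0):
    """Squared-exponential (RBF) covariance between two locations (hypothetical kernel choice)."""
    return signal_var * math.exp(-math.dist(x, y) ** 2 / (2.0 * length_scale ** 2))


def cholesky(A):
    """Lower-triangular L with A = L L^T for a symmetric positive-definite matrix A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(A[i][i] - s) if i == j else (A[i][j] - s) / L[j][j]
    return L


def forward_substitute(L, b):
    """Solve L y = b for y, with L lower-triangular."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y


def posterior_variance(x, observed, noise_var):
    """GP posterior variance at x: k(x, x) - k*^T (K + noise_var I)^-1 k*."""
    if not observed:
        return rbf(x, x)
    K = [[rbf(a, b) + (noise_var if i == j else 0.0) for j, b in enumerate(observed)]
         for i, a in enumerate(observed)]
    v = forward_substitute(cholesky(K), [rbf(x, a) for a in observed])
    return max(0.0, rbf(x, x) - sum(vi * vi for vi in v))


def expected_info_gain(x, observed, noise_var=0.1):
    """Mutual information (nats) between a noisy sample at x and the field, given past samples."""
    return 0.5 * math.log(1.0 + posterior_variance(x, observed, noise_var) / noise_var)


def greedy_deployments(candidates, observed, n_points, noise_var=0.1):
    """Pick n_points locations one at a time, each maximizing expected information gain."""
    chosen, sampled = [], list(observed)
    for _ in range(n_points):
        best = max(candidates, key=lambda c: expected_info_gain(c, sampled, noise_var))
        chosen.append(best)
        sampled.append(best)
    return chosen
```

With one sample already taken at the origin, the greedy rule prefers a far-away candidate such as (3, 0) over a nearby one such as (0.5, 0), because the GP is still uncertain there.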

Johanna Hansen*, Kyle Kastner*, Aaron Courville, and Greg Dudek

Presented at the Prediction and Generative Modeling in Reinforcement Learning Workshop at ICML 2018

We introduce MCTS planning over learned discrete latent states with conditional autoregressive forward planning. This work demonstrates an agent solving a complex planning problem with search, using only learned models. Long-horizon search over rollouts is enabled by a highly accurate PixelCNN forward model and by exact matching between states represented with VQ-VAE discrete latents.
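
Discrete latents are what make exact state matching possible. Once each continuous encoder output is snapped to its nearest codebook entry, a state becomes a tuple of integers that can serve directly as a dictionary key. Revisited states in the search then share statistics. The following minimal Python sketch illustrates this idea under several assumptions. The toy codebook and latents are hypothetical, and the table stores only visit counts and value sums. The PixelCNN forward model and the MCTS selection and expansion steps are omitted.

```python
import math


def quantize(latents, codebook):
    """VQ-VAE-style lookup: replace each latent vector by the index of its nearest code."""
    return tuple(
        min(range(len(codebook)), key=lambda j: math.dist(z, codebook[j]))
        for z in latents
    )


class TranspositionTable:
    """Search statistics keyed by a discrete latent state (a tuple of code indices)."""

    def __init__(self):
        self.visits = {}
        self.value_sum = {}

    def update(self, state_key, value):
        self.visits[state_key] = self.visits.get(state_key, 0) + 1
        self.value_sum[state_key] = self.value_sum.get(state_key, 0.0) + value

    def mean_value(self, state_key):
        n = self.visits.get(state_key, 0)
        return self.value_sum[state_key] / n if n else 0.0


# Hypothetical 2-entry codebook and two slightly different continuous encodings.
codebook = [[0.0, 0.0], [1.0, 1.0]]
key_a = quantize([[0.1, 0.0], [0.9, 1.2]], codebook)
key_b = quantize([[0.0, 0.2], [1.1, 0.8]], codebook)
```

Both encodings quantize to the same key `(0, 1)`, so backups from either rollout accumulate in a single node rather than in two near-duplicate ones.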

Johanna Hansen and Greg Dudek

Presented at IROS 2018

This paper considers a spatial coverage problem in which a network of passive floating sensors is used to collect samples in a body of water. We employ an iterative measurement and modeling scheme to incrementally deploy sensors so as to achieve spatial coverage, despite controlling only the initial sample point. Once deployed, sensors are moved around the survey area by ambient surface currents.

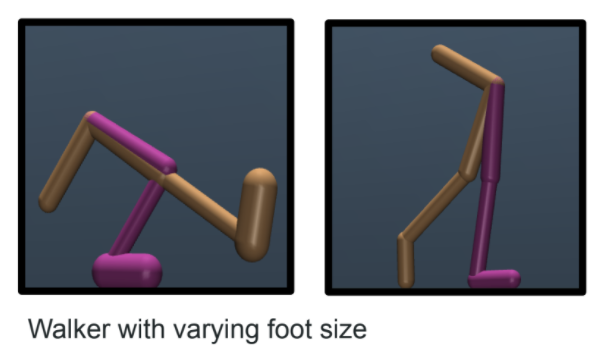

Peter Henderson, Wei-Di Chang, Florian Shkurti, Johanna Hansen, David Meger, Gregory Dudek

Presented at the Lifelong Learning Workshop at ICML 2017

This work presents a multitask simulation environment based on OpenAI Gym. We run a simple baseline using Trust Region Policy Optimization and publicly release the framework for the systematic comparison of multitask, transfer, and lifelong learning in continuous domains.

Sandeep Manjanna, Johanna Hansen, Alberto Quattrini Li, Ioannis Rekleitis, Gregory Dudek

Presented at the Conference on Computer and Robot Vision (CRV) 2017

We present a method to strategically sample locally observable features using two classes of sensor platforms. Our system consists of a sophisticated autonomous surface vehicle (ASV) that strategically samples based on information provided by a team of inexpensive sensor nodes. The sensor nodes effectively extend the observational capabilities of the vehicle by capturing georeferenced samples from disparate and moving points across the region. The ASV uses this information, along with its own observations, to plan a path that visits points it expects to be particularly informative.

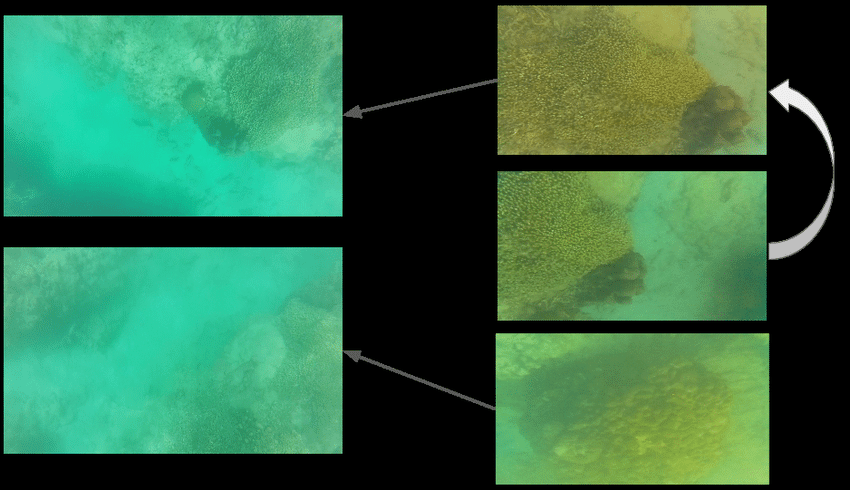

Alberto Quattrini Li, Ioannis Rekleitis, Sandeep Manjanna, Nikhil Kakodkar, Johanna Hansen, Gregory Dudek, Leonardo Bobadilla, Jacob Anderson, Ryan N. Smith

Presented at the International Symposium on Experimental Robotics (ISER 2016)

This paper presents experimental insights from the deployment of an ensemble of heterogeneous autonomous sensor systems over a shallow coral reef. The collected visual, inertial, GPS, and ultrasonic data are compared and correlated to produce a comprehensive view of the coral reef's health. We discuss coverage strategies, focusing on informed decisions that maximize the information collected within a fixed period of time.

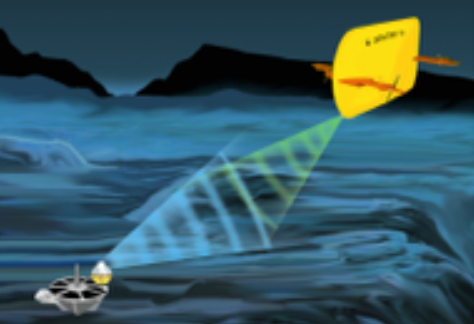

Johanna Hansen, Dehann Fourie, James Kinsey, Clifford Pontbriand, John Ware, Norm Farr, Carl Kaiser, Maurice Tivey

Presented at OCEANS 2015

The emergence of high-speed optical communication systems has introduced a way to transfer data relatively quickly underwater. Coupled with autonomous underwater vehicles (AUVs), this technology has the potential to enable efficient wireless data transfer underwater. Data muling, a data transport mechanism in which AUVs visit remote sensor nodes to transfer data, enables remote data to be recovered cheaply from underwater sensors. This paper details efforts to develop a system that reduces the operational complexity of autonomous data muling. We report algorithms, systems, and experimental results for a technique that uses an AUV to localize a subsea sensor node equipped with acoustic and optical communication devices.

Johanna Hansen, Greg Willden, Ben Abbott, and Ronald Green

Presented at the Transportation Research Board (TRB) Meeting, 2014

Southwest Research Institute® (SwRI) and the Federal Highway Administration (FHWA) teamed up to create a low-cost sensor to inspect in-service culverts in both wet and dry conditions. This sensor system, called the Ultrasonic Culvert Inspection System (UCIS), is suitable for mapping, monitoring, and diagnosing damage to roadway culverts using sonar mapping and live video. The sensor allows the inspector to evaluate culverts with minimal time, equipment, and cost to the sponsoring agency. Sonar data collected as the probe travels through the culvert can be combined with inertial measurement and distance data to produce a three-dimensional representation of the culvert that can be manipulated and viewed from many angles. A specially calibrated sonar scheme allows the sensor to be built from inexpensive components, making the system appropriate for high-risk, flooded inspections.

Johanna Hansen and JPL Vision Team

We worked with Mars geology specialists to develop a realistic visual dataset of sample return tubes. This multi-week dataset collection campaign used a Vicon tracking system to localize the tubes under a variety of natural illumination conditions and rock formations.

Johanna Hansen, Tu-Hoa Pham, and Renaud Detry

NASA is studying concepts for returning physical samples from Mars. One notional aspect of such a campaign would involve a rover retrieving tubes containing samples of the Martian terrain that were stored on the surface of Mars during a previous mission. In this work, we consider the problem of developing a vision-based sample tube re-localization capability to facilitate autonomous sample tube grasping and retrieval. Broadly, we consider two classes of algorithmic approaches, or experts, for re-localization. The terrain-relative expert finds a geometric transformation between images collected during sample tube deployment and the rover's current observations, allowing it to find the tube based on human annotations of the deployment image. The direct expert localizes the tube using only current observations. These two classes generally have complementary failure cases: terrain-relative methods fail under large changes in viewpoint, scale, or illumination, while direct methods fail when the tube is occluded. Our work seeks to exploit these performance differences by building several experts of each class and learning a meta-model that identifies the most performant expert for a given sample tube collection assignment. Ideally, the meta-model will be lightweight and robust, and will reduce computational cost by allowing us to execute experts contextually. In this report, we evaluate a variety of state-of-the-art experts against ground-truth localization and provide initial meta-modeling results that give insight into the direction of future work on the topic.
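
The meta-model's exact form is not described here, so the following is only a hypothetical sketch of the idea: a nearest-neighbor meta-model. It describes each past collection assignment with a small context vector and records each expert's localization error on it. For a new assignment, it runs the expert with the lowest mean error among the k most similar past assignments. The two context features (viewpoint change and illumination change, scaled to [0, 1]) are assumptions, and the expert names and error values are made up for illustration.

```python
import math
from collections import defaultdict


def select_expert(context, history, k=3):
    """Return the expert with the lowest mean error over the k nearest past assignments.

    context: feature vector for the new assignment (hypothetical features).
    history: list of (context_vector, {expert_name: localization_error}) pairs.
    """
    nearest = sorted(history, key=lambda item: math.dist(context, item[0]))[:k]
    totals, counts = defaultdict(float), defaultdict(int)
    for _, errors in nearest:
        for name, err in errors.items():
            totals[name] += err
            counts[name] += 1
    return min(totals, key=lambda name: totals[name] / counts[name])


# Made-up history: features are (viewpoint change, illumination change) in [0, 1].
HISTORY = [
    ((0.1, 0.1), {"terrain_relative": 0.02, "direct": 0.10}),
    ((0.2, 0.1), {"terrain_relative": 0.03, "direct": 0.12}),
    ((0.9, 0.8), {"terrain_relative": 0.50, "direct": 0.05}),
    ((0.8, 0.9), {"terrain_relative": 0.40, "direct": 0.06}),
]
```

With this made-up history, a small change in viewpoint and lighting selects the terrain-relative expert, while a large change selects the direct expert. This mirrors the complementary failure cases described above. Only the selected expert needs to run, which is where the hoped-for computational savings would come from.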

Johanna Hansen, Riwan Leroux, and Andrea Bertolo

The goal of this project was to build a tool to easily classify and detect zooplankton in Canadian lakes. We trained a hierarchical ResNet classifier capable of handling this imbalanced dataset and tuned an ACN to enable robust pixel-based nearest-neighbor lookups that assist in labeling new species.

Johanna Hansen, Mingxi Zhou, and Ralf Bachmayer

This work sought to understand how icebergs change shape over time. Over a series of days, we collected both acoustic observations below the waterline with an underwater glider and unstructured images of the part of the iceberg visible above the water. I trained a pix2pix model to segment the iceberg images from limited labels and then used standard structure-from-motion (SfM) tools to create a 3D volume estimate of the air-exposed portion of the iceberg. Later, the above- and below-water estimates were stitched together to estimate the iceberg's volume.

Johanna Hansen, Elisa Ferreira, Juliette Lavoie, Julie Tseng, Jasmine Wang

In this AI4Good hackathon project, we sought to understand how Montreal's bike-sharing program influenced transportation, particularly in low-income communities. We show how reliable bike and station availability can increase the likelihood that a neighborhood's residents commute by bike.

ICLR 2020 and NeurIPS 2020 Organizer for the AI4Earth machine learning workshop. This workshop highlights work at the intersection of AI and the Earth and space sciences. In 2020, I organized the "Sensors and Sampling" track and managed the website.

Differentiable vision, graphics, and physics in machine learning (DiffCVGP) Organizer

NeurIPS 2020 Organizer for the DiffCVGP workshop and facilitator of the Mentorship Program and Gather.town Poster Session. This workshop brings together research in vision, graphics, and physics in which we explicitly encode our knowledge of the rules of the world as differentiable programs. (Thumbnail courtesy of PhiFlow, one of my favourite papers presented at the workshop.)

Informed Scientific Sampling in Large-scale Outdoor Environments (ISSLOE) Organizer

IROS 2019 Organizer for the ISSLOE workshop at IROS 2019, where we discussed recent progress in robotic sampling for monitoring large-scale outdoor environments such as forests, bodies of water, agricultural fields, and complex urban settings. The thumbnail is from Thomas Stastny's amazing invited talk, "Monitoring Glaciers Beyond the Horizon".

McGill Reading Group 2016: Deep-Desc

SciPy 2015 Talk: Seafloor Characterization with Python as a Toolbox

This website template was stolen from Jon Barron using this source code.
